Integrating AI anomaly detection into your fintech security stack

Moving AI security from a buzzword to a practical reality requires a clear implementation plan. This ‘how-to’ delivers that plan, walking fintech security and dev teams through the five essential steps of integrating AI-driven anomaly detection. Covering everything from data centralization and model selection to the critical tuning and automated response phases, this guide serves as a technical blueprint for building a smarter, adaptive defense for your cloud-native stack.

Nikita Alexander

June 9, 2025

5 min read

For a modern fintech, agility is everything. Your cloud-native infrastructure, microservices architecture, and continuous deployment pipelines enable you to innovate at speed. But this dynamic environment also creates a complex and ever-changing attack surface that traditional, rules-based security tools struggle to protect.

Static firewall rules and signature-based alerts can’t keep up with ephemeral containers or novel API abuse tactics, because they only catch what someone has already described. The answer is a security model that learns and adapts alongside your environment, which is what AI-driven anomaly detection provides.

Instead of just looking for known threats, these systems learn what constitutes normal behavior within your unique environment and flag any deviation that could indicate a threat. This guide provides a practical, step-by-step process for security architects, developers, and DevSecOps teams looking to integrate AI anomaly detection into their security stack.

Laying the groundwork

Jumping straight into technology procurement is a recipe for failure. A successful integration starts with clear objectives.

Step 0a: define your protection goals

Be specific. What are you trying to achieve? Your goals will determine what data you need and how you measure success. Examples include:

- “Reduce fraudulent payment transactions by 50%.”

- “Detect API abuse and credential stuffing attacks in real time.”

- “Identify compromised employee accounts before significant data exfiltration occurs.”
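
A goal like the first one above only becomes measurable once you pin down its baseline. The sketch below assumes a hypothetical `ProtectionGoal` record and an illustrative baseline of 240 fraudulent transactions per month; it simply shows the arithmetic, where a 50% reduction target is met when (baseline - current) / baseline is at least 0.5.

```python
from dataclasses import dataclass

@dataclass
class ProtectionGoal:
    """Hypothetical record for a measurable goal: a metric, its baseline, and a target reduction."""
    name: str
    metric: str
    baseline: float          # value measured before the program starts
    target_reduction: float  # e.g. 0.5 for "reduce by 50%"

    def reduction(self, current: float) -> float:
        """Fractional reduction of the metric relative to the baseline."""
        if self.baseline <= 0:
            raise ValueError("baseline must be positive")
        return (self.baseline - current) / self.baseline

    def is_met(self, current: float) -> bool:
        return self.reduction(current) >= self.target_reduction


# Illustrative numbers only.
fraud_goal = ProtectionGoal(
    name="Reduce fraudulent payment transactions",
    metric="fraudulent_transactions_per_month",
    baseline=240,
    target_reduction=0.5,
)

print(f"{fraud_goal.reduction(150):.1%}")  # 37.5%
print(fraud_goal.is_met(150))               # False
print(fraud_goal.is_met(120))               # True
```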

Step 0b: map your data sources

AI is powered by data. You need to know where your most valuable data lives. For a typical fintech, this includes:

- Cloud infrastructure logs (e.g., AWS CloudTrail, Azure Activity Log)

- Application and microservice logs

- API gateway logs

- User authentication events

- Transaction databases
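
One lightweight way to keep this data map honest is to record, for each source, which goals it supports and whether it is already flowing. The sketch below is illustrative only: the source names, owners, and goal identifiers are hypothetical, and the transaction database is marked as not yet onboarded to show how a coverage gap surfaces.

```python
from typing import NamedTuple

class DataSource(NamedTuple):
    owner: str
    onboarded: bool        # is this source already flowing into the pipeline?
    supports: frozenset    # goal IDs this source provides evidence for

# Hypothetical goal IDs mirroring the example goals above.
GOALS = {"payment_fraud", "api_abuse", "compromised_accounts"}

DATA_MAP = {
    "aws_cloudtrail": DataSource("platform", True, frozenset({"compromised_accounts"})),
    "api_gateway_logs": DataSource("api-platform", True, frozenset({"api_abuse"})),
    "auth_events": DataSource("identity", True, frozenset({"api_abuse", "compromised_accounts"})),
    "transaction_db": DataSource("payments", False, frozenset({"payment_fraud"})),
}

def coverage_gaps(goals: set, data_map: dict) -> list:
    """Return goals that no onboarded data source currently supports."""
    covered = set()
    for source in data_map.values():
        if source.onboarded:
            covered |= source.supports
    return sorted(goals - covered)

print(coverage_gaps(GOALS, DATA_MAP))  # ['payment_fraud']
```

Re-running a check like this whenever a source is added or retired is a cheap way to catch goals that have quietly lost their data.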

With clear goals and a data map, you’re ready to begin the technical integration.

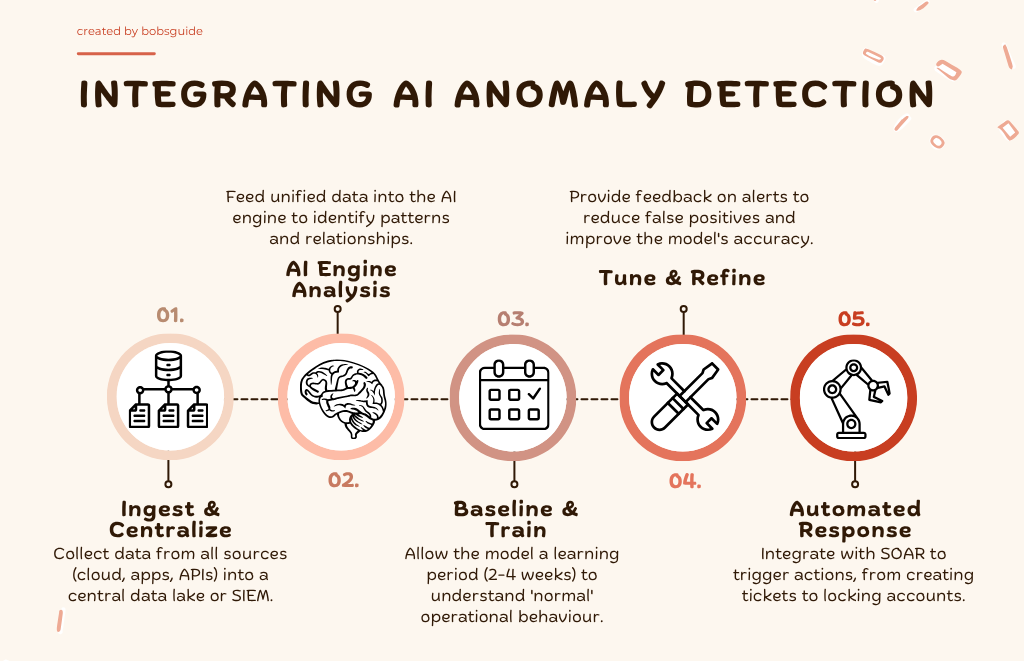

The 5 steps to integration

Step 1: centralize your data ingestion

Your data sources are scattered. To be effective, your AI model needs a single, unified view. You must create a pipeline to pull logs from all your sources into a central repository.

- Action: Deploy log shippers (like Fluentd, Vector, or Logstash) to collect data from your various services.

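- Best Practice: Stream this data into a security data lake or a modern SIEM that can handle large volumes of unstructured and semi-structured data. Parse and normalize every record into one consistent schema (for example, JSON records with shared field names for timestamp, user, source IP, and action) so the model sees the same fields regardless of which service produced the event.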
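
To make the normalization step concrete, here is a minimal Python sketch that maps two differently shaped log lines onto one shared record. The CloudTrail field names (`eventTime`, `eventName`, `sourceIPAddress`, `userIdentity.arn`) are real; the API gateway record layout and the target field names (`timestamp`, `source`, `actor`, `action`, `src_ip`) are assumptions chosen for illustration. In a production pipeline this mapping would more likely live in your log shipper’s transform stage than in standalone code.

```python
import json
from datetime import datetime, timezone

def normalize_cloudtrail(record: dict) -> dict:
    """Map an AWS CloudTrail event onto the (illustrative) common schema."""
    return {
        "timestamp": record["eventTime"],  # CloudTrail already uses ISO 8601 UTC
        "source": "aws_cloudtrail",
        "actor": record.get("userIdentity", {}).get("arn"),
        "action": record["eventName"],
        "src_ip": record.get("sourceIPAddress"),
    }

def normalize_api_gateway(record: dict) -> dict:
    """Map a hypothetical API gateway access-log record onto the common schema."""
    ts = datetime.fromtimestamp(record["epoch_ms"] / 1000, tz=timezone.utc)
    return {
        "timestamp": ts.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "source": "api_gateway",
        "actor": record.get("api_key_id"),
        "action": f'{record["method"]} {record["path"]}',
        "src_ip": record.get("client_ip"),
    }

NORMALIZERS = {
    "aws_cloudtrail": normalize_cloudtrail,
    "api_gateway": normalize_api_gateway,
}

def normalize(source: str, raw_line: str) -> str:
    """Parse one raw JSON log line from `source` and re-emit it in the common schema."""
    return json.dumps(NORMALIZERS[source](json.loads(raw_line)), sort_keys=True)

# Illustrative raw lines (documentation IP ranges, fake account ID).
RAW_CLOUDTRAIL = json.dumps({
    "eventTime": "2025-06-09T10:15:00Z",
    "eventName": "ConsoleLogin",
    "sourceIPAddress": "203.0.113.7",
    "userIdentity": {"arn": "arn:aws:iam::123456789012:user/alice"},
})
RAW_API = json.dumps({
    "epoch_ms": 1749464100000,
    "method": "POST",
    "path": "/v1/payments",
    "api_key_id": "key-42",
    "client_ip": "198.51.100.23",
})

print(normalize("aws_cloudtrail", RAW_CLOUDTRAIL))
print(normalize("api_gateway", RAW_API))
```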

Step 2: select your anomaly detection engine

You have two main paths: build or buy. For the vast majority of fintechs, buying a specialized solution is the most practical path.

- Option A: ‘Buy’ (Recommended): Adopt an existing commercial or open-source platform that ships with pre-built ML models for security.

- Commercial Vendors: Platforms from cybersecurity firms specialize in this, offering sophisticated models and support.

- Cloud-Native Tools: Services like Amazon GuardDuty or Microsoft Sentinel have powerful, built-in anomaly detection capabilities that are easy to enable if you’re already in their ecosystem.

- Option B: ‘Build’: If you have a mature data science team, you can build custom models using libraries like TensorFlow. This offers maximum customization but is a massive undertaking requiring deep expertise in both security and MLOps.
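
Whichever path you choose, it helps to put the engine behind a narrow interface so that the rest of the pipeline (alert tuning, response playbooks) does not care whether scores come from a vendor or an in-house model. The sketch below assumes a hypothetical `AnomalyEngine` protocol with `fit` and `score` methods and, as a stand-in for a real TensorFlow model, a deliberately simple z-score engine over one numeric feature such as daily login counts.

```python
import statistics
from typing import Protocol, Sequence

class AnomalyEngine(Protocol):
    """Hypothetical contract the pipeline relies on, whether the engine is bought or built."""
    def fit(self, history: Sequence[float]) -> None: ...
    def score(self, value: float) -> float: ...

class ZScoreEngine:
    """Toy 'build' engine: distance from the historical mean, in standard deviations."""

    def __init__(self) -> None:
        self.mean = 0.0
        self.stdev = 1.0

    def fit(self, history: Sequence[float]) -> None:
        self.mean = statistics.fmean(history)
        # Guard against a perfectly flat history, whose stdev would be 0.
        self.stdev = statistics.stdev(history) or 1.0

    def score(self, value: float) -> float:
        return abs(value - self.mean) / self.stdev

def flag(engine: AnomalyEngine, value: float, threshold: float = 3.0) -> bool:
    """True if the engine scores this value above the alert threshold."""
    return engine.score(value) > threshold

engine = ZScoreEngine()
engine.fit([100, 104, 98, 101, 97, 103, 99, 102])  # e.g. daily login counts
print(flag(engine, 101))  # False
print(flag(engine, 160))  # True
```

A thin adapter around a bought product could implement the same two methods, so switching between ‘buy’ and ‘build’ later touches only one class.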

Step 3: the baselining and training period

This is the most critical phase. Once your engine is connected to your data, it enters a learning mode to establish your organization’s unique “rhythm of business.”

- Action: Allow the system to run unimpeded for a period, typically 2-4 weeks. During this time, it observes user activity, network traffic, and API calls to learn what is normal.

- Best Practice: Ensure the data fed during this period is representative of your typical operations. Avoid running unusual activities like large-scale penetration tests during the initial baselining, as this can skew the model’s perception of “normal.”
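
The baselining rules above (train on representative traffic, keep pen tests out, give it a few weeks) can be sketched in plain Python. This version assumes hourly API-call counts per entity as the only feature, a 14-day minimum of clean data, and caller-supplied exclusion windows for known unusual activity. It uses the median and median absolute deviation (MAD) as its notion of “normal”; that is a simple robust-statistics choice made for illustration, not what any particular product does.

```python
import statistics
from datetime import datetime, timedelta

# Assumption: at least 14 days of clean data, the low end of the 2-4 week guidance.
MIN_BASELINE = timedelta(days=14)

def in_excluded_window(ts: datetime, windows) -> bool:
    """True if ts falls inside any [start, end) window, e.g. a scheduled pen test."""
    return any(start <= ts < end for start, end in windows)

def build_baseline(observations, excluded_windows):
    """observations: (timestamp, entity, hourly_api_calls) tuples.

    Returns {entity: (median, MAD)} learned only from data outside the excluded windows.
    """
    kept = [o for o in observations if not in_excluded_window(o[0], excluded_windows)]
    if not kept:
        raise ValueError("no usable training data")
    timestamps = [ts for ts, _, _ in kept]
    span = max(timestamps) - min(timestamps)
    if span < MIN_BASELINE:
        raise ValueError(f"only {span} of clean data; keep the engine in learning mode")

    per_entity: dict[str, list[int]] = {}
    for _, entity, calls in kept:
        per_entity.setdefault(entity, []).append(calls)

    baseline = {}
    for entity, counts in per_entity.items():
        med = statistics.median(counts)
        # Median absolute deviation: a spread measure that a few spikes cannot drag around.
        mad = statistics.median(abs(c - med) for c in counts)
        baseline[entity] = (med, mad)
    return baseline

# Illustrative data: three weeks of hourly call counts for one service account,
# plus a day-long pen test (May 10) whose traffic must not count as "normal".
START = datetime(2025, 5, 1)
PENTEST = (datetime(2025, 5, 10), datetime(2025, 5, 11))
observations = [(START + timedelta(hours=h), "svc-payments", 40 + h % 5) for h in range(21 * 24)]
observations += [(PENTEST[0] + timedelta(hours=h), "svc-payments", 5000) for h in range(24)]

print(build_baseline(observations, [PENTEST]))  # {'svc-payments': (42.0, 1.0)}
```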

Step 4: tune the model and refine alerts

No model is perfect out of the box, and your initial output will include false positives. The goal of this step is to teach the model what it got right and wrong, making it progressively more accurate.

- Action: Your security team must regularly review the alerts generated by the system. Use the platform’s interface to provide feedback on the alerts (e.g., marking an alert as a “true positive” or “expected behavior”).

- Best Practice: Start with conservative alert thresholds so that only the strongest anomalies generate alerts, then gradually increase sensitivity as the model’s accuracy improves. This keeps the initial alert volume manageable and prevents your team from being overwhelmed.
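
The feedback loop and the “start conservative, then tighten” practice can be combined into one small controller. In this sketch, which uses a hypothetical `AlertTuner` class and made-up default numbers, analysts label each reviewed alert as a true positive or expected behavior. At the end of each review period the alert threshold is lowered one step (raising sensitivity) only if enough alerts were reviewed and the confirmed precision met the target.

```python
from dataclasses import dataclass, field

@dataclass
class AlertTuner:
    """Hypothetical helper that raises sensitivity only as analyst feedback confirms accuracy.

    `threshold` is an anomaly-score cut-off: alerts fire when score > threshold,
    so lowering it makes the system more sensitive.
    """
    threshold: float = 6.0          # start conservative: only the strongest anomalies alert
    floor: float = 3.0              # never become more sensitive than this
    step: float = 0.5
    target_precision: float = 0.8   # share of reviewed alerts confirmed as true positives
    min_reviews: int = 20           # don't adjust on a handful of labels
    labels: list[bool] = field(default_factory=list)

    def record_feedback(self, true_positive: bool) -> None:
        """True = 'true positive', False = 'expected behavior'."""
        self.labels.append(true_positive)

    def precision(self) -> float:
        return sum(self.labels) / len(self.labels) if self.labels else 0.0

    def review_cycle(self) -> float:
        """Run at the end of each review period; returns the threshold for the next one."""
        if len(self.labels) >= self.min_reviews:
            if self.precision() >= self.target_precision:
                self.threshold = max(self.floor, self.threshold - self.step)
            self.labels.clear()  # start the next feedback window fresh
        return self.threshold

tuner = AlertTuner()
for verdict in [True] * 18 + [False] * 2:   # 90% precision this period
    tuner.record_feedback(verdict)
print(tuner.review_cycle())  # 5.5
for verdict in [True] * 10 + [False] * 10:  # 50% precision: hold steady
    tuner.record_feedback(verdict)
print(tuner.review_cycle())  # 5.5
```

Holding the threshold steady when precision drops, rather than lowering it on a fixed schedule, is what protects the team from alert floods; a stricter variant could also raise the threshold when precision falls.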

Step 5: integrate detection with automated response

Detection without response is just noise. The real power of AI is realized when you connect its alerts to an automated workflow.

- Action: Connect your anomaly detection engine’s outputs to your SOAR (Security Orchestration, Automation, and Response) platform, ticketing system (Jira), or messaging channel (Slack/Teams).

- Best Practice: Create tiered response playbooks. For example:

- Low-Severity Anomaly: Automatically create a low-priority ticket for analyst review.

- High-Severity Anomaly (e.g., suspected account takeover): Trigger a SOAR playbook to temporarily restrict the user’s permissions, require step-up authentication, and send a high-priority alert to the on-call security engineer.
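
The tiered playbooks above amount to a routing decision from alert severity to actions. The minimal sketch below assumes a two-level `Severity` enum and an `Alert` record, both hypothetical, and returns action descriptions instead of calling real SOAR, Jira, or Slack APIs, so the routing logic can be tested on its own.

```python
from dataclasses import dataclass
from enum import Enum

class Severity(Enum):
    LOW = "low"
    HIGH = "high"

@dataclass(frozen=True)
class Alert:
    user_id: str
    kind: str           # e.g. "account_takeover", "api_abuse"
    severity: Severity

def playbook_for(alert: Alert) -> list[str]:
    """Map an alert to an ordered list of response actions.

    The action strings are hypothetical placeholders; in practice each would be
    a call into your SOAR platform, ticketing system, or chat integration.
    """
    if alert.severity is Severity.HIGH:
        return [
            f"restrict_permissions user={alert.user_id}",
            f"require_step_up_auth user={alert.user_id}",
            f"page_on_call team=security reason={alert.kind}",
        ]
    return [f"create_ticket priority=low reason={alert.kind} user={alert.user_id}"]

print(playbook_for(Alert("u-1042", "account_takeover", Severity.HIGH))[0])
# restrict_permissions user=u-1042
print(playbook_for(Alert("u-2210", "unusual_login_hour", Severity.LOW)))
# ['create_ticket priority=low reason=unusual_login_hour user=u-2210']
```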

A living defense

Integrating AI anomaly detection is not a one-time project; it’s the foundation of a living, adaptive security posture. As your fintech adds new features, services, and users, your security model will need to be continuously monitored and retrained. By following these steps, you can build a sophisticated, proactive defense that enables your business to innovate securely and stay ahead of emerging threats.

Bobsguide is a